A few years ago I was shocked to find out that the transistor had been invented far earlier than the time given in most of the textbooks. I looked into this and discovered that many of the inventions we take for granted weren’t invented by the person usually associated with them. I wrote this paper for a Frontiers in Education conference.

Honesty in History

Abstract -

Index Terms – Computer science education, Curriculum development, Ethics, History of computing.

Introduction

This paper demonstrates that the history of computing and engineering in some undergraduate texts is inaccurate and misleading. We look at specific instances of inaccuracy and explain why it is necessary for the academic community to adopt a more rigorous approach. If I were to tell my students that the square root of nine was 4 or that microprocessors were made of plutonium, they would be outraged by such travesties of the truth. However, few would notice if I were to make equally erroneous and outlandish statements about the history of computing.

This paper looks at the way in which myths are perpetuated in engineering history, and how history is sometimes simplified to a point where statements in textbooks become grossly misleading. We appeal to academics to avoid superficial sources when preparing material on the history of computing and to examine their reference material as if they were researching any other topic. Honesty and integrity are the foundations of the academic world. So why do we make an exception for the teaching of the history of technology and tolerate breathtaking levels of inaccuracy?

We look at the origin of major inventions such as the telephone and wireless, and demonstrate how inventions are inevitably the result of a long process over time and of clusters of near-

The history of computer science is important because it demonstrates how computing has grown and evolved. An appreciation of history is important in research; we need to understand how products and systems develop and what drives their evolution. A knowledge of history helps us to avoid repeating the mistakes of others.

Inaccuracy in Computer History

A paper that had a profound effect on how I view computer history was “Historical Content in Computer Science texts: A Concern” by Kaila Katz. She demonstrated how historical material in popular mainstream texts was sometimes wildly inaccurate. Some of the inaccuracies she identified are:

Anachronisms – the use of terminology that was not current at the time of the device being described (e.g., the use of software when referring to Babbage’s analytical engine or describing Ada King as a programmer).

Unfounded assumptions – bland assumptions that go unchallenged.

Errors – statements that are demonstrably incorrect.

As well as actual errors and misconceptions, the history of computer science can be described by the pejorative term Whig History; that is, a form of history that demonstrates an unbroken trend of development from the past to the present. Whig history deals in heroes and often neglects developments that were failures. It is often from its failures that society learns most.

We now examine five inventions in computer science and electronic engineering. In each case we demonstrate that the inventor named in much popular literature is not necessarily the actual inventor.

What should be included in Computer History?

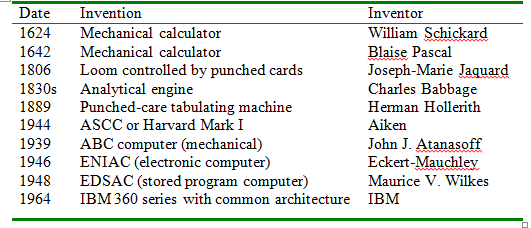

Table 1 is taken from the CC2001 computing curriculum [2], which was the first such work to include the history of computing as a formal topic. Even today few schools teach a course on computer history. CC2001 specifies a minimum of one hour of computer history and divides computing into a pre-

The history of computing hardware (this paper does not look at software and networking) is processor-

If I ask my students what the most important developments in computing over the last few years have been, they usually respond by listing some of the milestones in chip design such as multithreading and Intel’s dual/quad core technologies. I disagree, suggesting instead that the rise of the simple but elegant USB serial bus (together with plug-

TABLE 1

CONVENTIONAL SEQUENCE OF EVENTS

SP1. History of computing [core]

Minimum core coverage time: 1 hour

Topics:

Prehistory—the world before 1946

History of computer hardware, software, networking

Pioneers of computing

Learning objectives:

1. List the contributions of several pioneers in the computing field.

2. Compare daily life before and after the advent of personal computers and the Internet.

3. Identify significant continuing trends in the history of the computing field.

In the next five sections we take a brief look at inventions that are of prime importance in our society and demonstrate that, in each case, the invention was not solely the work of the pioneer usually associated with it. These notes demonstrate that educators are not providing a sufficiently accurate record of the history of engineering.

Invention 1 – The Computer

The invention of the computer extends over more than 2,000 years. Some texts provide a poor account of the computer’s history because they are highly selective in the way in which they describe milestones in the history of the computer. In particular, they do not distinguish sufficiently between the stages of the computer’s development; all too often, they concentrate on personalities rather than ideas. We can briefly define the stages in the development of the computer as:

Mechanical Calculator

The development of the mechanical calculator is attributed to Pascal and Schickard who created machines that automated addition and subtraction and later multiplication (this explanation omits the abacus and Napier’s bones for reasons of space). These machines used decimal arithmetic and mechanical components such as cog wheels, gear chains and levers. They demonstrated that arithmetic calculations could be automated.

Mechanical Computer

The mechanical computer was proposed by Charles Babbage in the 1830s. This computer was never constructed due to financial and engineering limitations. However, it can be called the first computer in the sense that it was able to read a program and to perform a set of operations. The program and data were to be stored on punched cards.

Electronic Analog Computer

The electronic analog computer performs calculations by modelling a system electronically; that is, a circuit behaves like the system under investigation. For example, you find the terminal velocity of a parachutist by modelling the parachutist’s acceleration due to gravity and the retarding effect due to wind resistance. Analog computing did not lead to digital computing, but it did accelerate the development of electronics.
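The parachutist example can be illustrated with a minimal digital sketch. An analog computer would solve the equation of motion continuously, with a circuit standing in for the falling body; the Python below swaps in simple step-by-step (forward Euler) integration instead. The quadratic drag law, the 80 kg mass, and the drag constant of 0.25 kg/m are assumptions chosen only for illustration and do not come from any source cited in this paper.

```python
import math


def simulate_fall(mass_kg=80.0, drag_kg_per_m=0.25, g=9.81,
                  dt=0.01, duration_s=60.0):
    """Integrate dv/dt = g - (k/m) * v**2 with forward Euler steps.

    All default values are illustrative assumptions.
    Returns the downward velocity (m/s) at the end of the run.
    """
    velocity = 0.0
    steps = int(duration_s / dt)
    for _ in range(steps):
        # Gravity accelerates the parachutist; drag grows with v squared.
        acceleration = g - (drag_kg_per_m / mass_kg) * velocity ** 2
        velocity += acceleration * dt
    return velocity


def terminal_velocity(mass_kg=80.0, drag_kg_per_m=0.25, g=9.81):
    """Closed-form terminal velocity, where drag exactly balances gravity."""
    return math.sqrt(mass_kg * g / drag_kg_per_m)


if __name__ == "__main__":
    print(f"simulated velocity after 60 s: {simulate_fall():.2f} m/s")
    print(f"analytical terminal velocity:  {terminal_velocity():.2f} m/s")
```

The simulated velocity settles at the value where the drag term cancels gravity, which is the quantity an analog model would present as a steady output voltage.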

Electro-

The electro-

Electronic Calculator

The early electronic computers replaced the mechanical components of the electro-

Electronic Computer

The electronic computer is a version of Babbage’s analytical engine implemented electronically. That is, it can read the instructions of a program from memory, execute the instructions, and store the results. Several computers claim to be the world’s first computer as we know it today (EDVAC, EDSAC, Manchester Mark 1).

Conclusion

These brief notes demonstrate that the development of the computer is a continuum from the simple mechanical calculator to the computer as we know it today. How you assign a name to the inventor of the computer depends on which milestone in the computer’s history you regard as most significant.

Invention 2 – The Transistor

No single invention is more responsible for the computer age than the transistor that lies at the heart of amplifiers and logic circuits. Today’s ability to put thousands of millions of transistors on a single chip makes it possible to construct tiny computers and large memory systems.

I used Google to search for the invention of the transistor and found the following:

“The scientists that were responsible for the 1947 invention of the transistor were: John Bardeen, Walter Brattain, and William Shockley. Bardeen, with a Ph.D. in mathematics and physics from Princeton University, was a specialist in the electron conducting properties of semiconductors. Brattain, Ph.D., was an expert in the nature of the atomic structure of solids at their surface level and solid-

In 1956, the group was awarded the Noble Prize in Physics for their invention of the transistor.”

No one would dispute that Bardeen, Brattain and Shockley at Bell Labs were responsible for the development of the transistor and today’s electronics industry. However, the story of the transistor’s origin is more complex. A strong claim to the invention of the transistor belongs to Julius Edgar Lilienfeld.

Lilienfeld received a PhD in physics in Berlin in 1905. He was an accomplished physicist who worked on separating gas mixtures, cryogenics, and x-

In 1926 Lilienfeld made a US patent application for a device that “related to a method of and apparatus for controlling the flow of an electrical current between two terminals of an electrically conducting solid by establishing a third potential between said terminals.” [6].

Lilienfeld proposed the field effect transistor, a device that is closer to today’s transistors than those constructed at Bell Labs. A brief literature search reveals that Lilienfeld’s claim is now widely supported, although there is no direct evidence that he created a working transistor.

Given that Lilienfeld’s claims to the design of the transistor appear undisputed, it seems surprising that so few texts refer to his invention. Moreover, this omission fuels the suspicion that an invention created in the labs of a major corporation is more likely to be noticed.

Invention 3 – The Microprocessor

The microprocessor is a computer on a chip and was invented at Intel in 1970 by a team led by Ted Hoff. The microprocessor was an inevitable invention that would have been made even if Intel had not been the first company to pass the winning post. The notion of the microprocessor existed, the need for a microprocessor existed, and semiconductor manufacturing was rapidly advancing in the late 1960s to the point at which a microprocessor was possible (the enabling technology of the integrated circuit had been invented by Noyce and Kilby in 1958).

In 1969 Intel was a new company making semiconductor memories. Busicom, a Japanese company, asked Intel to make a desktop calculator. Initially, the calculator was to be constructed from about 12 discrete chips. Ted Hoff decided to use Intel’s advanced technology to construct a single-

Intel also created an 8-

Although much literature credits Hoff and Faggin with the construction of the world’s first microprocessor, there are competing claims. For example, Ray Holt claims to have invented the microprocessor in 1969 while working for the Garrett AiResearch Corp on a contract from Grumman Aircraft. Holt claims to have invented a microprocessor for use as a control device in the F-14A jet fighter and maintains that military secrecy prevented him from receiving the credit for it. Furthermore, Holt claims that a 1971 paper submitted to Computer Design magazine was not printed until 1998 for reasons of security. Holt’s chip set does not implement a programmable microprocessor as we know it today; it is closer to a microprogrammed device that is dedicated to a specific task.

In 1990 Gilbert Hyatt was granted a patent for a Single Chip Integrated Circuit Computer Architecture (after a 20-

Irrespective of the actual invention of the microprocessor, by concentrating on backward compatibility (allowing new processors to run old software) and cutting-

Conclusion

The invention of the microprocessor is claimed by several inventors. The inventor who won the patent is not the inventor who won the lion’s share of the market and fame in the textbooks. Does it matter? The history of the microprocessor demonstrates that it was inevitable; however, Intel’s domination of the market led to the emergence of the IBM PC and that led to the IBM-

Invention 4 – Radio

Of all the major inventions based on electronics, the invention of the radio is probably the most contentious. Radio was, of course, discovered rather than invented. The existence of radio waves was first predicted by the Scottish mathematician James Clerk Maxwell. Radio waves themselves were first created and detected by Heinrich Hertz in 1887. Consequently, you could say that radio waves were discovered by Maxwell and that the radio was invented by Hertz.

However, Hertz’s work was not immediately of practical value. What was needed was a means of reliably generating radio waves, using them to carry information (modulation to carry either Morse code or speech/music), filtering waves of different frequency (to allow multiple transmitters), and amplification (to allow reliable long-

Traditionally, the invention of wireless is attributed to Guglielmo Marconi, who was born in Italy and emigrated to England in 1896 at the age of 21. Marconi was familiar with Hertz’s work and used a telegraph and spark transmitter to generate radio waves together with a device called a coherer to detect them. Neither the spark transmitter nor the coherer was invented by Marconi. After gradually increasing the range of his transmitter, Marconi announced that he had managed to transmit across the Atlantic in 1901. This spanning of the Atlantic by radio made Marconi a household name. Although Marconi used the spark-

A brief glance at the literature on the development of wireless will reveal that Marconi was not the only player in this field. Consider:

- Edouard Branly – In 1890 the French inventor Branly invented the coherer, a device that could detect radio waves. The coherer consisted of a tube of iron filings that stuck together and conducted in the presence of radio waves.

- Nikola Tesla – In 1896 Tesla transmitted signals over 30 miles in the USA. He filed a US patent on the transmission of electrical energy in 1900, although he had filed patents on his enabling technology as early as 1896.

- Oliver Lodge – Lodge improved Branly’s coherer and publicly demonstrated the transmission of radio waves in 1894, a year before Marconi. Lodge was issued with a patent on wireless telegraphy in 1898.

- Alexander Popov – Popov was a Russian who conducted experiments with radio from the 1890s; he further refined Lodge’s coherer and used it to detect lightning strikes at a distance from the radio waves that they generated. Popov demonstrated wireless telegraphy in 1896 and achieved transmission distances of 20 miles by 1899. Because of the Cold War, Popov’s achievements were championed by the Soviet Union and denigrated by the West.

- Jagadish Chandra Bose – Bose was born in India and demonstrated radio transmission in 1894, ahead of Marconi. He also improved the design of the coherer in 1899 by using an iron disk separated from a layer of mercury by a very thin film of oil.

- Reginald Fessenden – The Canadian Fessenden pioneered continuous wave technology (unlike the pulsed wave technology of Marconi’s spark gap transmitters). It was Fessenden who made the first sound broadcast in 1900 and the first music broadcast in 1906.

- John Ambrose Fleming – Although the British engineer Fleming is better known for his invention of the diode vacuum tube (that was later to form the basis of the three electrode triode amplifying device), he developed the transmitter used by Marconi to generate the high power radio waves that spanned the Atlantic in 1901.

Conclusion

Wireless technology has shaped the modern world. However, it is in this area that inventors and their supporters make more claims and counter claims than almost anywhere else. Although Marconi was an experimenter and an entrepreneur, it seems to me that his claims to be the inventor of radio are not necessarily stronger than those of other pioneers such as Tesla. Even Marconi’s claim for spanning the Atlantic rests on the design of the high-

Does it matter who we call the inventor of radio? The problem is that the naming of one person above all others obscures the large number of radio pioneers who nearly simultaneously made major contributions to the development of wireless technology.

Invention 5 – The Light Bulb

Although the invention of the light bulb is not connected with the computer, it is indicative of the way in which the history of an invention is distorted. In this case, the man most closely associated with the light bulb in the public’s mind did not invent it. Consider the following quote [7]: “Thomas Alva Edison lit up the world with his invention of the electric light. Without him, the world might still be a dark place.”

This quote not only credits Edison as the inventor of the light bulb, but also implies that without Edison we would have no electric lighting; a preposterous claim. Even worse, a website called ‘The Lemelson Center’ at http://invention.smithsonian.org/home/ with the banner ‘The Lemelson Center is dedicated to exploring invention in history and encouraging inventive creativity in young people’ presents the following text. ‘Thomas Alva Edison changed our world! His genius gave us electric lights in our home and an entire system that produced and delivered electrical power… Of course, Edison's most famous invention to come out of Menlo Park was the light bulb… ’ Coming from such an authoritative source, this statement is seriously misleading.

Electric lighting has a far longer history than many sources imply. The English chemist Humphry Davy demonstrated the carbon arc light as early as 1809. This created an intense beam suitable for use in searchlights but of little use for domestic or street lighting.

Warren de la Rue invented an incandescent light bulb in 1840 with a platinum filament in a glass bulb with the air removed. However, it was impracticable because of the difficulty, at that time, of maintaining a good vacuum seal. Joseph Swan in England invented and demonstrated the carbon-

Edison demonstrated his carbon filament light bulb in 1879 and produced bulbs using carbonized bamboo filaments in 1890 (Edison tested over 6,000 possible filaments). Edison’s principal contribution is his methodical experimentation, testing, and commercial control of all aspects of light bulb production.

Edison’s carbon filament bulb had a relatively short life, and Karl Auer von Welsbach in Austria tried osmium in 1898. It was not until 1906 that the tungsten filament was introduced by the General Electric Company. Tungsten has ideal properties for a filament because of its high melting point and ductility. The only significant difference between today’s bulbs and those of 1906 is that we now fill the bulb with an inert gas such as argon to reduce the filament’s evaporation.

Conclusion

Of all the inventions discussed in this paper, it appears that the development of the incandescent light bulb is the most widely misreported in popular literature and web sites. Edison made very important contributions to the development of electric lighting. However, he neither invented the concept nor created the first light bulb and his light bulb was rapidly rendered obsolete by the tungsten filament. By solely concentrating on Edison, many articles on the history of the light bulb fail to give readers an insight into the long and complex process of invention. In particular, they omit the nature of multiple near-

Ways Forward

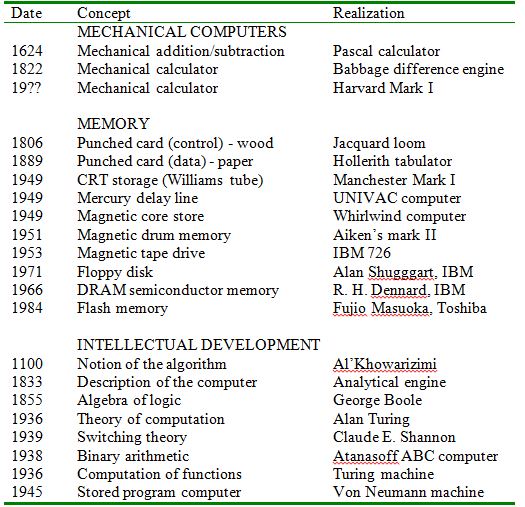

A better way of teaching the history of computing and other engineering concepts might be to decouple the invention from the inventor; that is, instead of providing a list of major inventors, it may be better to provide a list of the intellectual steps and the various implementations between the earliest beginnings and the final products.

The following examples are indicative and are not intended to be complete; they demonstrate only the concepts involved and do not provide full examples. Table 2 illustrates a conventional presentation of computer history with a chronological list of dates and the corresponding inventions.

Table 3 demonstrates an alternative approach in which events are grouped according to their category. In this example, we have grouped together the development of mechanical computers and memory technology.

Table 3 also includes intellectual development; for example, Claude Shannon’s development of switching theory (a practical extension of Boolean algebra) provided the foundation for the use of binary arithmetic and all digital circuit design.

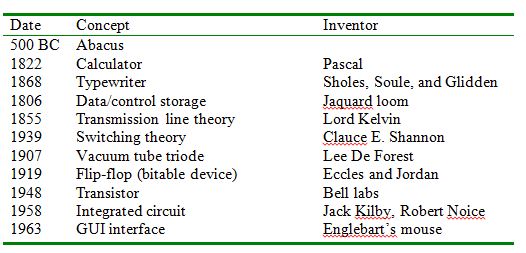

Table 4 demonstrates another way of looking at the history of computing; this time from the point of view of the development of enabling technologies. Here the technologies that were required in order to implement computers are described. All too often some of these technologies are omitted in conventional computer histories; for example, the laying of the first transatlantic telegraph cable led to surprising results: rapidly keyed signals at one end were received as slowly varying signals at the other. Thomson (later Lord Kelvin) was given the problem to solve and he laid the foundation for the field of electronics and the behaviour of signals in circuits. Similarly, the development of the vacuum tube was needed to construct electronic computers.

TABLE 2

Conventional sequence of events

TABLE 3

Computer Development by Concept

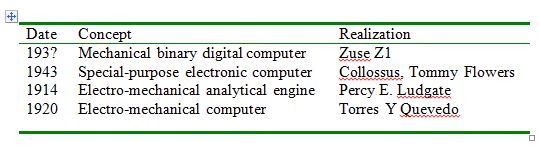

Table 5 looks at the history of computing from the point of view of dead end projects and ideas that never directly affected the outcome of computing. Two famous cases are Konrad Zuse’s Z1 computer, that was invented in war-

Brian Randell describes two interesting computer pioneers who are not well known and whose inventions can be said to have led to a dead end [5]. Percy Ludgate designed an analytical engine using electro-

TABLE 4

Computer Development by Enabling Technology

TABLE 5

Dead-

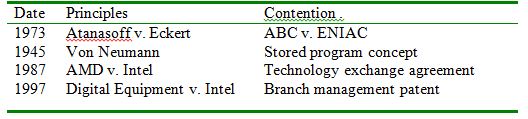

Table 6 looks at yet another way of organising computer history: by means of disputes within the computer industry. This brief example mentions the Atanasoff v. Eckert dispute that ultimately awarded Atanasoff the patent to the first computer because he had been visited by Eckert. Atanasoff argued that Eckert’s later success was derived from his original ideas.

Another interesting dispute involved John von Neumann. Although he is often regarded as the father of the stored program computer, some believe that the ideas expressed in the First Draft Report on the EDVAC that carries his sole name are those of Eckert and Mauchly, and that the actual author of the report was Herman Goldstine [8].

TABLE 6

Litigation and Disputes

One modern factor that does provide hope for an improvement in the teaching of computer (and engineering) history is the emergence of wikis, particularly Wikipedia. Wikipedia allows errors of omission (or honest misunderstanding) to be rapidly corrected. While writing this paper I found, post hoc, that Wikipedia provided information with a high level of accuracy.

Conclusions

This paper is called Honesty in History because, in higher education today, undergraduates are not always given an accurate history of the origins of computer science. We point out two areas that are most deficient: the first is the general accuracy of some of what has been written; the second is the omission of much important detail that is necessary to give students a fair view of the evolution of computing.

The final section of this paper has tentatively suggested alternative ways of looking at computer history; not in terms of heroes but in terms of the development of concepts or of the enabling technologies. We have also suggested that looking at areas such as disputes within the computer industry and inventions and discoveries that did not achieve success can also provide suitable themes for discussion in the classroom.

References

[1] Kaila Katz, “Historical Content in Computer Science texts: A Concern” IEEE Annals of the History of Computing, Vol. 19, No. 1, 1997

[2] Computing Curricula 2001 – Computer Science, The Joint Task Force on Computing Curricula, IEEE Computer Society and Association for Computing Machinery.

[3] “Those who forget the lessons of history are doomed to repeat it”, IEEE Annals of the History of Computing, Vol. 18, No. 2, 1996

[4] B. Randell, “The history of digital computers”, The Institute of Mathematics and its Applications. Springer Verlag, Berlin, 1973.

[5] B. Randell, “From Analytical Engine to Electronic Digital Computer: The Contributions of Ludgate, Torres, and Bush”, IEEE Annals of the History of Computing, Vol. 4, No. 4, October 1982.

[6] IEEE-

[7] http://depts.gallaudet.edu/englishworks/exercises/exreading/topic1a.html

[8] “From the Editor’s Desk”, IEEE Annals of the History of Computing, Vol. ?, No. ?, July-